A User-Centric Energy-Saving Method for Dynamic 5G Heterogeneous Networks Using Deep Reinforcement Learning

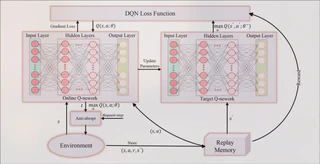

Energy consumption (EC) represents a significant challenge for 5G and 6G mobile networks, making the optimization of energy savings (ES) a primary concern. This paper illustrates the practical benefits of user-centric deep reinforcement learning (DRL) models in achieving a green cellular network. The primary objective is to optimize energy usage in a heterogeneous network (HetNet). The optimization of power consumption (PC) in such networks is a non-convex and NP-hard problem. To address this challenge, we propose using reinforcement learning (RL). Because the state and action spaces are large, classical RL approaches are unsuitable. Therefore, DRL methods, notably the deep Q-network (DQN) and deep deterministic policy gradient (DDPG) methods, are adopted. The proposed approach entails user-centric connection establishment, whereby small base stations (SBSs) are switched to the on mode. The mode switching determined by the DRL methods is controlled by an anti-abrupt transition mechanism, which prevents unnecessary oscillations in the network. The results are benchmarked against existing approaches for ES, specifically the genetic algorithm (GA) and particle swarm optimization (PSO). The proposed methods outperform both GA and PSO in terms of ES and significantly reduce time consumption, which improves their feasibility for practical implementation.
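To make the idea of damping mode oscillations concrete, the following is a minimal sketch of one possible gating rule, not the mechanism used in this paper. It assumes a hypothetical minimum dwell time: a mode change requested by the DRL agent is accepted only after the SBS has held its current mode for `min_dwell` decision steps. The names `DwellGate`, `min_dwell`, and `smooth` are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DwellGate:
    """Hypothetical anti-oscillation gate for a single SBS (illustrative only)."""
    min_dwell: int = 3       # assumed: steps a mode must be held before switching
    is_on: bool = False      # current mode of the SBS
    steps_in_mode: int = 0   # steps spent in the current mode

    def apply(self, requested_on: bool) -> bool:
        """Return the mode actually applied for this decision step."""
        wants_switch = requested_on != self.is_on
        if wants_switch and self.steps_in_mode >= self.min_dwell:
            # Accept the switch; the switching step counts as the first
            # step spent in the new mode.
            self.is_on = requested_on
            self.steps_in_mode = 1
        else:
            # Keep the current mode, either because no change was requested
            # or because the dwell time has not yet elapsed.
            self.steps_in_mode += 1
        return self.is_on


def smooth(requests: List[bool], min_dwell: int) -> List[bool]:
    """Filter a sequence of requested on/off modes through a DwellGate."""
    gate = DwellGate(min_dwell=min_dwell)
    return [gate.apply(r) for r in requests]


if __name__ == "__main__":
    # An agent that toggles its request on every step.
    raw = [True, False] * 4
    print(smooth(raw, min_dwell=3))
```

With a request that toggles on every step, the gate lets through only occasional switches, which is the kind of behavior an anti-abrupt transition mechanism aims for. Setting `min_dwell=0` disables the gate and passes the agent's requests through unchanged.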